
The 'Wrong' Ad Won Big: How 7-Figure Brands A/B Test in 2025

Oct 14, 2025

15 min. read

Vivian

If you’re running at Shopify Plus scale, you already know the stakes. With ad spend in the millions, global traffic, and complex stacks, you can’t afford vanity experiments. A test that only tweaks a button or headline won’t move the needle. You need experiments that drive insights big enough to scale across markets.

That’s why the most successful brands don’t treat A/B testing as trial and error. They treat it as a system. They audit, hypothesize, execute, and iterate until results compound.

We spoke to fscales, a performance agency managing experimentation for Shopify Plus brands spending six and seven figures a month. In this interview, they break down:

▫️Why testing starts with an audit, not creative guesswork.

▫️How one “reverse psychology” concept beat direct response ads for a laundry brand.

▫️Why failure isn’t wasted effort, but hints at how and what to scale.

Expert take: What it means to run successful A/B tests on Shopify Plus

What types of Shopify Plus brands do you typically work with?

We usually work with Shopify Plus brands operating at a serious scale: supplements, health & wellness, beauty, and home goods are common categories. These brands are often spending six to seven figures a month on ads, so the expectation is different.

Every test has to deliver insight that can be scaled. Our role is to make sure that creative and CRO work together so those budgets aren’t wasted on guesswork.

How do you decide what to test first for a new client?

We never start with random ideas; we start with an audit. The process is driven by data and customer sentiment, nothing else:

▫️ Audit ad metrics: We dissect platform-level ad data (Meta, TikTok) and identify which headlines, hooks, placements, and creative variants actually generate lift and which fall flat.

▫️ Extract customer language: We analyze product reviews and comments to discover real emotional triggers, recurring pain points, and unexpected motivations, straight from your shoppers.

▫️ Surface social signals: We mine Reddit threads and social conversations to grasp the unfiltered dialogue around the brand and category; those raw insights outperform traditional surveys.

▫️ Forge high-impact hypotheses: Instead of just tweaking the UI, we ask:

- Which narrative taps directly into a customer's belief system?

- Which phrasing mirrors how prospects actually speak about the brand?

Essentially, ignore the generic "what-if" tests and prioritize the highest-impact experiments because your time, like your ad budget, is too valuable to waste.

Can you walk us through your A/B testing workflow?

Our workflow always begins with the hypothesis, but that hypothesis is never random. Here’s how it works:

1️⃣ Data analysis: We build the hypothesis out of hard data: ad performance metrics like thumb-stop rate, hold rate, and watch time, paired with qualitative insights from customer reviews and sentiment analysis.

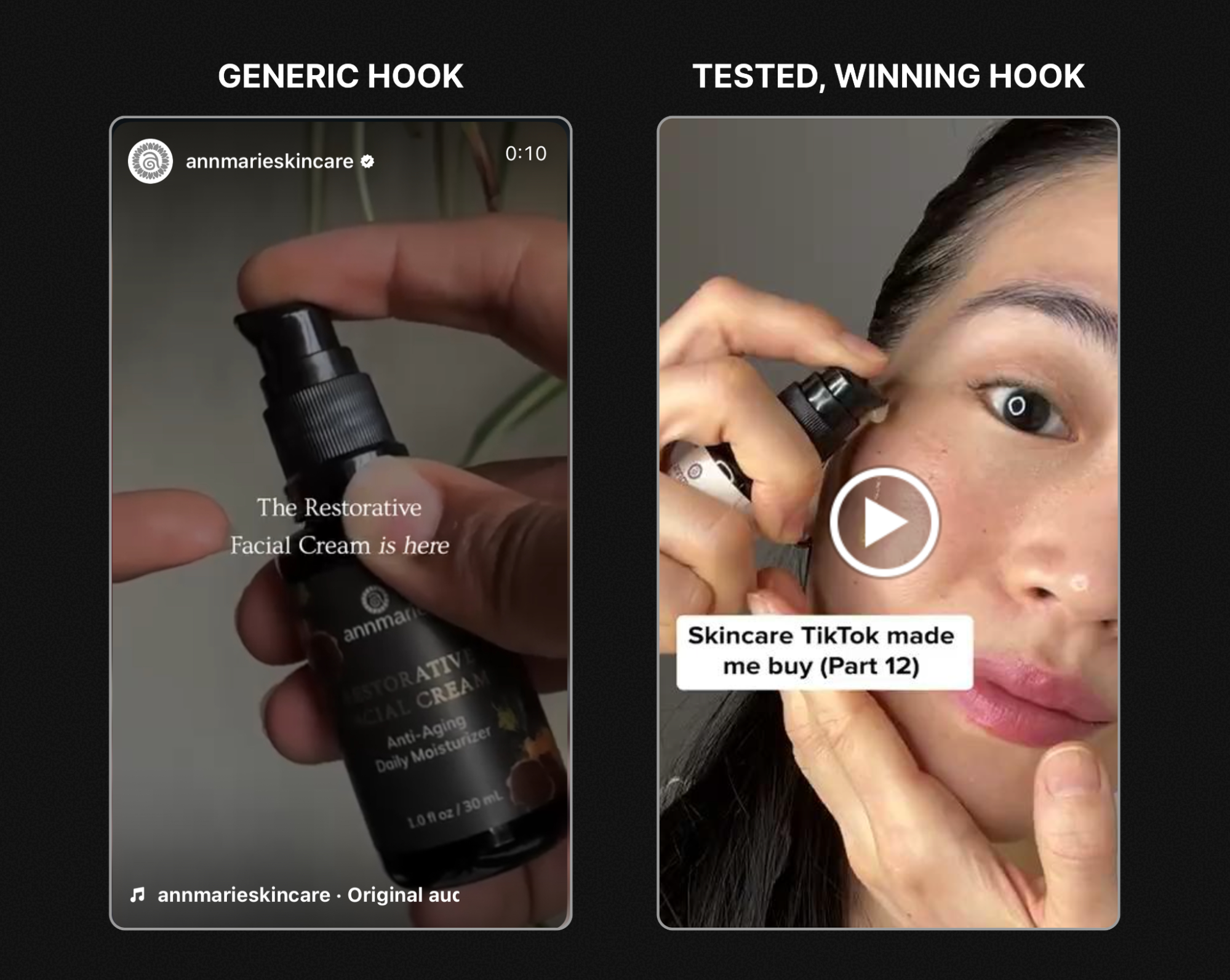

2️⃣ Variations: Once the insight is clear, we move into variant creation. Instead of cosmetic tweaks, we test meaningful creative shifts: two versions of a UGC video with different hooks, or scripts that lean into distinct customer pain points.

3️⃣ Implementation: This happens inside controlled campaigns on Meta or TikTok, tracked against precise KPIs like CPA and ROAS. We don’t just watch results; we dissect them in real time using AI-enhanced reporting tools. That lets us see not only which variant wins, but why it wins.

4️⃣ Iteration: Winning variants get refined into the next cycle. Losing ones aren’t discarded; they inform the next hypothesis.

That creates a continuous loop: insight → test → optimize → scale. It’s how every round of testing makes the next one stronger.
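To make the KPI step concrete, here is a minimal Python sketch of how two variants might be compared on CPA and ROAS. It uses the standard definitions (CPA is spend divided by conversions, ROAS is revenue divided by spend). The `VariantResult` class, the variant names, and all of the numbers are hypothetical and only for illustration; they are not fscales' data or tooling.

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    """Hypothetical container for one ad variant's campaign totals."""
    name: str
    spend: float        # ad spend attributed to the variant
    conversions: int    # purchases attributed to the variant
    revenue: float      # revenue attributed to the variant

    @property
    def cpa(self) -> float:
        """Cost per acquisition: spend divided by conversions."""
        return self.spend / self.conversions if self.conversions else float("inf")

    @property
    def roas(self) -> float:
        """Return on ad spend: revenue divided by spend."""
        return self.revenue / self.spend if self.spend else 0.0


# Made-up numbers, for illustration only.
variants = [
    VariantResult("hook_a", spend=5000.0, conversions=100, revenue=12500.0),
    VariantResult("hook_b", spend=5000.0, conversions=125, revenue=15000.0),
]

for v in variants:
    print(f"{v.name}: CPA={v.cpa:.2f} ROAS={v.roas:.2f}")

# Pick the variant with the lowest cost per acquisition.
best = min(variants, key=lambda v: v.cpa)
print(f"Lowest CPA: {best.name}")
```

A lower CPA alone does not show that the difference is real rather than noise, so in practice a comparison like this would be paired with a significance check before scaling the winner.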

Can you share a surprising or high-impact test?

One of our most surprising results came from work with a home goods brand. We tested two UGC video concepts:

The first version used a standard direct-response approach: benefits, urgency, and a clear call to action. The second leaned on reverse psychology, using a negative emotional hook instead of pushing features.

Conventional wisdom would back the direct-response ad. But the reverse psychology variant outperformed it by a wide margin. Engagement was stronger, acquisition costs dropped, and the campaign reshaped our creative direction.

The insight was clear: emotional resonance outpaces rational persuasion. By tapping into unexpected emotions, we gained access to a growth channel no amount of polished messaging had revealed.

How do you handle failed or inconclusive tests?

We don’t treat failed or inconclusive tests as wasted effort; we treat them as data. If a result comes back inconclusive, we dig into the metrics first.

Was there a messaging-to-audience mismatch, external noise, or a confounding factor?

If it’s truly inconclusive, we refine the hypothesis and re-run it with tighter variables.

When a test outright fails, we pivot quickly. The insight feeds back into the creative brief, and we apply it to the next round.

That iteration cycle (test, learn, adjust, re-test) is what keeps us moving fast.

Every failure removes one wrong path and sharpens where we go next.

What features matter most in a testing platform?

The most effective platforms keep experimentation fast, data-rich, and collaborative. We look for:

▫️ Robust data integration to track conversions in real time across the funnel.

▫️ AI-driven analysis that can break down what’s working in creative, both text and visuals.

▫️ Automated variant management with clean splitting and statistical significance built in.

▫️ Collaboration tools that keep strategists, editors, and creators aligned in one shared workflow.

What matters most is that the platform doesn’t slow us down. It needs to support proactive optimization, where we can launch, monitor, and iterate without friction.
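For readers wondering what "statistical significance built in" can look like under the hood, one common approach is a two-proportion z-test on conversion rates. The sketch below uses only Python's standard library. The function name and the sample numbers are hypothetical, and this is not a description of how any specific platform computes significance.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for a difference between two conversion rates.

    conv_* are conversion counts, n_* are visitors (or impressions).
    Returns (z, p_value). Assumes samples large enough for the
    normal approximation to hold.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up example: variant B converts 150 of 10,000 vs. A's 100 of 10,000.
z, p = two_proportion_z_test(100, 10_000, 150, 10_000)
print(f"z={z:.2f}, p={p:.4f}")
```

A small p-value (a threshold of 0.05 is a common convention) suggests the gap between variants is unlikely to be noise. A large one is a signal to keep the test running or to re-run it with tighter variables.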

What’s the most valuable test you’ve run?

One of our most valuable tests came from a legal weed brand. Instead of sticking to the usual benefit-driven hooks, we developed an “objection-buster” concept.

We mined their reviews and social comments to identify recurring objections, such as questions about taste, legitimacy, and efficacy. Then we built a creative that addressed those objections head-on, and tested it with a sarcastic angle and in an Instagram-style organic format.

The objection-buster variant outperformed everything else.

By speaking directly to customer doubts, we drove conversions and unlocked a massive spike in new customer acquisition that we doubled down on for sustained revenue growth.

The lesson is that tackling objections openly can be far more powerful than reiterating benefits customers already expect.

What buyer behavior trends influence strategy?

The biggest shift has been in attention and trust. Attention spans are shorter than ever, and polished ads don’t hold weight the way they used to. Buyers are skeptical of being sold to. They want content that feels authentic, like how they’d talk about the product themselves.

That’s why we lean into UGC, raw social proof, and conversational hooks. Instead of forcing traditional ad copy, we mirror the customer’s own language: what they write in reviews, comments, or threads.

Creative is now the targeting. If the message resonates, it finds the right audience faster, and the algorithm accelerates that reach.

What’s the most over-hyped trend in ecommerce testing?

The biggest hype right now is around AI-generated creatives that promise ‘instant winners.’ The idea that you can plug in a prompt and get ads ready to scale ignores the reality of brand nuance.

AI is valuable and we use it heavily for analysis and reporting, but it can’t replace human judgment. When brands rely on templated outputs without iterative testing, they end up with content that looks generic and fails to connect.

Sustainable ROI comes from pairing AI with human oversight, not from shortcuts.

What tools and tech stack do you use?

Our stack is built to cover the full testing loop, from launch to insight to iteration:

▫️ Meta Ads Manager & TikTok Ads Manager for setup and deployment.

▫️ Google Analytics, Triple Whale, and Northbeam for cross-funnel tracking.

▫️ AI-powered analysis tools that break down performance by creative element (copy, visuals, context).

▫️ Slack & ClickUp for internal collaboration and fast feedback loops.

▫️ Frame.io for streamlined creative reviews across teams.

▫️ Google Veo, Icon AI Ads Maker, and Canva AI as AI-powered creative production assistants for our designers and editors.

Together, this makes sure that every test is measurable, trackable, and actionable. Nothing gets lost between hypothesis, execution, and optimization.

Actionable takeaways on running A/B tests

✅ Start with an audit, not a hunch.

Mine ad data, reviews, and social sentiment before you run a single test. Hypotheses rooted in customer signals always outperform guesswork.

✅ Test for impact, not cosmetics.

Skip button colors. Focus on narratives, hooks, and objections that actually influence purchase decisions.

✅ Build hypotheses from data and sentiment.

Every test starts with hard numbers (conversion rate, thumb-stop rate, watch time) paired with what customers are actually saying.

✅ Treat failures as inputs.

Inconclusive or losing tests still deliver insight. Feed them back into briefs and re-run with sharper variables.

✅ Emotion outperforms logic.

Reverse psychology hooks and objection-busting creative often beat direct-response ads because they resonate more deeply.

✅ Creative is the new targeting.

Use UGC, raw social proof, and customer-mirrored language to cut through shrinking attention spans and skepticism.

✅ Balance AI with human judgment.

AI is powerful for analysis and reporting, but sustainable ROI comes from pairing it with human insight and iteration.

✅ Invest in the right full-funnel tech stack.

Launch with Ads Manager, track with Triple Whale/Northbeam, analyze with AI, build creatives with Google Veo and Canva AI, and align the team with Slack, ClickUp, and Frame.io so nothing gets lost from hypothesis to optimization.

Is your experimentation process built to scale?

For Shopify Plus brands, A/B testing is more than surface-level visual changes. It’s about turning customer signals into structured hypotheses, running disciplined experiments, and scaling the creative that drives real revenue.

The challenge is that a lot of platforms can’t keep up with how fast high-volume brands need to test. Data lives in silos, collaboration breaks down, and insights arrive too late to matter.

That’s exactly why we built ABConvert, a platform designed for experimentation at Shopify Plus scale. With it, you can:

- Manage A/B tests with automated splitting and statistical rigor.

- Connect data across your funnel in real time.

- Use AI to analyze creative performance and understand why a variant wins.

- Align teams with shared workflows from hypothesis to iteration.

Start your 14-day free trial with ABConvert and see how we can power your experimentation stack.
